One of the great announcements from this year's Microsoft Connect() conference was YAML support for VSTS build definitions. For me, it's a great step towards "codifying" the build pipeline.

The current TFS build technology, introduced in Team Foundation Server 2015, is loose and extensible, but it is rather difficult to maintain as code and doesn't really fit the "pipeline as code" definition. If you remember, earlier versions of Team Foundation Server (TFS 2005 and TFS 2008) used an MSBuild file to run builds. Whilst this was easy to code and maintain, extensibility was rather limited. Then Team Foundation Server 2010 introduced XAML builds, which had better support for workflows but were difficult to work with. TFS 2015 is simpler than XAML, but the build logic is spread across different parts of the build definition. YAML solves this shortcoming nicely.

Enable YAML Builds Preview Feature

At the time of writing this post, support for YAML builds is still in preview in VSTS. To enable it for your account, click on your profile and select the "Preview Features" option from the drop-down menu.

Select the option "from this account [projectName]".

Scroll down until you find the "Build Yaml definitions" feature and set it to On.

We are now ready to use YAML builds.

Creating a YAML Build

There are two ways in which we can set up a YAML build.

1) Create a file called .vsts-ci.yml. When you push a commit containing this file to VSTS, a build definition that uses the file is created for you.

2) Explicitly create a build definition using the YAML template, providing the path of the YAML file that you have committed to the repo.
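For reference, option 1 might look like the sketch below. It assumes the file from option 1 sits at the root of the repository and reuses the same two steps that are built up later in this post; the solution name is this demo's, so treat it as a placeholder.

```yaml
# Sketch of the file from option 1 (assumed to live at the repository root).
# The steps mirror the build.yml created later in this post.
steps:
- task: nugetrestore@1
  displayName: NuGet Restore
  inputs:
    projects: "MyWebApplication.sln"   # placeholder: your own solution file
- task: MSBuild@1
  displayName: Building solution
  inputs:
    command: build
    projects: "MyWebApplication.sln"
```

The content is the same as for option 2; the difference is only in how the build definition comes into existence.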

We will go with option 2.

Create build.yml file

YAML is a popular format for configuration files and is used by some exciting technologies like Docker and Ansible. It's great that VSTS now supports it as well.

For this demo, we are creating a build for a simple .NET web application. To build our application, we need to do the following:

1) Restore all NuGet packages

2) Build the entire solution

Our very simple YAML file looks as below:

    steps:
    # Step 1: restore the NuGet packages for the solution
    - task: nugetrestore@1
      displayName: NuGet Restore
      inputs:
        projects: "MyWebApplication.sln"
    # Step 2: build the entire solution
    - task: MSBuild@1
      displayName: Building solution
      inputs:
        command: build
        projects: "MyWebApplication.sln"

The file is pretty much self-descriptive. As you can see, we have two tasks. The first task uses nugetrestore, passing the solution as input. The second task executes MSBuild, passing the application's solution.

Commit the file to your local Git repo and push the commit to VSTS.

Creating the Build definition

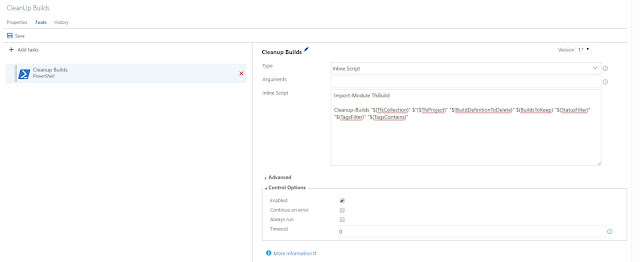

Now that our YAML file is committed, we will create a build definition to use it. To do this, click on the New button to create a build definition. For the build template, select YAML and click Apply.

We will then be asked to provide the build name, agent queue and path to the YAML file. Make sure you have selected the correct repository and branch in the "Get Sources" option for the build definition.

Please note that YAML builds are only supported for Git; they are not supported when TFVC is used as the version control repository.

Click on the Triggers tab to make sure that the Continuous Integration option is selected.

Click Save to save your build. This build is now set up as a continuous integration build for your repository and is triggered with every commit.

Conclusion:

If you compare the amount of work you had to do to create a YAML build, it's really a breeze compared to earlier TFS builds. There are many use cases for YAML build definitions. You can set up a complete pipeline, declaratively executing each step, which can be developed and tested locally before being used in VSTS.