Unless you have been living under a rock for the last few years, you will have heard of Microservices. If you don't quite know

Microservices are autonomous services with a bounded context, are fully self-contained and have their own release cadence.

In other words, Microservices can be created, deployed and released independently. Their independence enables quick turnaround. Teams can release one version after another of an ever-improving service without depending upon or worrying about anybody else. A microservice essentially owns its own data store, CI/CD process, deployment and everything that goes with it. A team can choose its own technology stack and build something independent of other builds.

What about "code reuse"?

Reusability has always been a fundamental principle in software engineering, and one way to achieve it is code reuse. Most often this is done through code libraries. In the last 20 years, I have seen the technology for creating and consuming code libraries evolve from C++ libraries to COM and ActiveX objects, to JAR files and .NET libraries, to NuGet packages and Node modules. Regardless of technology, common libraries have always been around, and using them has been considered desirable.

However, the general default advice in a Microservice architecture is

"Don’t share common libraries between Microservices."

Why? Because common libraries create coupling.

As an example, suppose "Team A" created a brilliant library for making web requests while building "Microservice A". Another team, "Team B", started building "Microservice B" and decided to use Team A's library to make web requests. All good so far. In the second iteration, Team B realized that they needed to enhance the web request library. Now they would either need Team A to update it for them or make the change themselves. If they change the library, the change would have an impact on "Microservice A" as well. This is undesirable.

Are Shared Libraries between Microservices a definite No-no?

Well… there are differing opinions on this. There is an apparent conflict between the Don't Repeat Yourself (DRY) and Loose Coupling principles. Some pundits argue strongly against using any shared libraries, while others consider that bad advice. There is plenty of discussion on the topic to sway you one way or another.

Here is my take on it

Using shared libraries between Microservices is fine as long as the following conditions are met:

1. Not Domain Specific:

The common library should NOT contain any domain-specific code. In other words, it is OK to use code libraries to reuse technical code, e.g. common code for making HTTP requests. If you think about it, we already do this when we use platform libraries for things like database connectivity, file I/O, etc. However, if your shared library contains business logic, then it will cause problems. Remember that each microservice has its own bounded context.
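To make the distinction concrete, here is a minimal sketch of the kind of purely technical helper that could reasonably live in a shared library. The function names `build_json_request` and `send_json` and the default timeout are hypothetical, chosen only for illustration. Note that nothing in it knows about orders, customers or any other business concept.

```python
import json
import urllib.request
from typing import Any, Mapping, Optional

# Hypothetical default; a real library would likely make this configurable.
DEFAULT_TIMEOUT_SECONDS = 10.0


def build_json_request(
    url: str,
    payload: Optional[Mapping[str, Any]] = None,
    headers: Optional[Mapping[str, str]] = None,
) -> urllib.request.Request:
    """Build a JSON request: GET when there is no payload, POST otherwise."""
    merged = {"Accept": "application/json"}
    data = None
    if payload is not None:
        data = json.dumps(payload).encode("utf-8")
        merged["Content-Type"] = "application/json"
    # Caller-supplied headers win over the defaults.
    merged.update(headers or {})
    method = "POST" if data is not None else "GET"
    return urllib.request.Request(url, data=data, headers=merged, method=method)


def send_json(request: urllib.request.Request,
              timeout: float = DEFAULT_TIMEOUT_SECONDS) -> Any:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

By contrast, a function such as `calculate_order_discount` would be domain logic, and by the rule above it belongs inside the service that owns that bounded context.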

2. Use Package Manager:

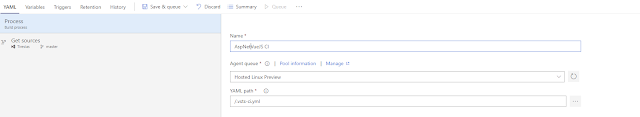

The shared libraries must be published and consumed using a package manager. Package managers such as NPM or NuGet ensure that each consumer depends on a particular version of the library. This means that when a newer version of the library is published, a consuming service doesn't need to update unless it wants to use functionality in the newer version.
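The effect of version pinning can be sketched with a toy in-memory registry. This is an illustration only: `PackageRegistry` and the package name `web-requests` are hypothetical and do not model the actual NPM or NuGet APIs.

```python
from typing import Dict, Tuple

Version = Tuple[int, int, int]


class PackageRegistry:
    """Toy stand-in for a package feed (hypothetical, not a real feed API)."""

    def __init__(self) -> None:
        self._packages: Dict[str, Dict[Version, str]] = {}

    def publish(self, name: str, version: Version, artifact: str) -> None:
        versions = self._packages.setdefault(name, {})
        if version in versions:
            # Assumption of this sketch: a published version never changes.
            raise ValueError(f"{name} {version} is already published")
        versions[version] = artifact

    def resolve(self, name: str, pinned: Version) -> str:
        """Return exactly the version the consumer pinned."""
        return self._packages[name][pinned]


registry = PackageRegistry()
registry.publish("web-requests", (1, 0, 0), "build of 1.0.0")

# Microservice A pins the version it was built and tested against.
service_a_pin: Version = (1, 0, 0)
before = registry.resolve("web-requests", service_a_pin)

# Team B's enhancement ships as a new version; the old one is untouched.
registry.publish("web-requests", (2, 0, 0), "build of 2.0.0")
after = registry.resolve("web-requests", service_a_pin)
service_b = registry.resolve("web-requests", (2, 0, 0))
```

In this sketch `before` and `after` are the same artifact, so Microservice A is unaffected until its team chooses to move the pin.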

3. Small library with Single Responsibility:

Over the years I have seen the menace of "core" or "common" libraries, where every reusable code segment is put into a single common library. The problem is that such a library touches far too many points and constantly needs to be updated. Libraries shared between microservices must be small and do a single job.

4. Avoid Transitive Dependencies:

If your common library depends on another library, which in turn depends on yet another library, it creates a chain of dependencies that is difficult to map and maintain. In this case, I would say that it's better to duplicate the code than to create a dependency hell of inter-dependent libraries.
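One way to see how quickly such a chain grows is to compute everything a service pulls in from its direct dependencies. The following is a minimal sketch; the package names in the example graph are made up.

```python
from typing import Dict, List, Set


def transitive_dependencies(package: str, direct: Dict[str, List[str]]) -> Set[str]:
    """Return every package reachable from `package` through `direct`."""
    seen: Set[str] = set()
    stack = list(direct.get(package, []))
    while stack:
        dep = stack.pop()
        if dep in seen:
            continue  # already visited; this also protects against cycles
        seen.add(dep)
        stack.extend(direct.get(dep, []))
    return seen


# Hypothetical dependency graph: each key lists only its direct dependencies.
graph = {
    "microservice-b": ["common-http"],
    "common-http": ["retry-utils"],
    "retry-utils": ["clock-utils"],
    "clock-utils": [],
}

pulled_in = transitive_dependencies("microservice-b", graph)
```

Here "microservice-b" declares a single dependency, yet `pulled_in` contains three packages, and a change to any of them can ripple into the service.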

Ownership Dilemma

As I mentioned, microservices are fully autonomous. The team that creates them owns them and is responsible for everything about them, from the choice of technology to the deployment and release process. What about shared libraries? If "Team A" creates a shared library that is used by "Team B", which team owns the library? This is indeed a dilemma, especially when the shared library needs to be modified. If Team B needs a change, do they make it themselves or ask Team A to make it? In either case, there is a dependency between teams.

From experience, my stance is that the team that writes the shared library owns it. If they require a change in the library for their own service, they should make the change. However, if other teams want changes in the shared library, they should instead drop the dependency, duplicate the code and make the change within their service (or create another library). I agree that this causes duplication of effort, but in my experience it works much better than modifying the library for a purpose it wasn't written for in the first place.

Then there is Composite

Microservices do not have dependencies on other microservices. To provide business value, there has to be something that brings the services together. It could be a web page for a web application, a web form for a windows app or an API gateway for public web APIs. Regardless of its type, it acts as a composite. How are shared libraries resolved in a composite? As an example, let's say that Services A and B both use CommonLib, but Service A uses a different version of CommonLib than Service B. The answer lies in the definition of a microservice itself. Microservices are self-contained, so each one brings in and deploys everything it needs to run. Even if Services A and B are merely separate processes on the same machine, they load different versions of the common library into their own process space. The composite does not need to worry about the internal intricacies of the services.
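The point about separate process spaces can be demonstrated with a small sketch. It assumes a made-up module named `commonlib` and stands in for each "service" with a one-line Python process; real services would of course ship their dependencies through their own deployment packages.

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import List


def run_service(service_name: str, lib_dir: Path) -> str:
    """Start a separate process that imports commonlib from its own folder."""
    code = "import commonlib; print(commonlib.VERSION)"
    env = dict(os.environ, PYTHONPATH=str(lib_dir))
    result = subprocess.run(
        [sys.executable, "-c", code],
        env=env,
        cwd=str(lib_dir),
        capture_output=True,
        text=True,
        check=True,
    )
    return f"{service_name} loaded commonlib {result.stdout.strip()}"


def demo() -> List[str]:
    """Give each service its own private copy of commonlib, then run both."""
    outputs = []
    with tempfile.TemporaryDirectory() as root:
        for service, version in (("Service A", "1.0.0"), ("Service B", "2.0.0")):
            lib_dir = Path(root) / service.replace(" ", "_")
            lib_dir.mkdir()
            # Each service deploys the library version it was built against.
            (lib_dir / "commonlib.py").write_text(f'VERSION = "{version}"\n')
            outputs.append(run_service(service, lib_dir))
    return outputs


if __name__ == "__main__":
    for line in demo():
        print(line)
```

Each process sees only the copy deployed alongside it, so the two versions never meet, and whatever composes the services has nothing to resolve.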

And finally

As a disclaimer, this post is purely my own opinion, based on my experience of working with microservices and decomposing monoliths. If you follow this general guidance, you will find yourself with a large number of very small libraries that do not change very often. Both of these traits are good things. Also, in my experience it is easier to follow these rules if you are starting fresh. If you are starting with a huge monolith, you will almost certainly start with a huge common library. Getting clarity on dependencies while working backwards takes finer skills.